GPT-4 Prompts & PromptBase

PromptBase originally launched in summer 2022 as a marketplace for GPT-3 prompts. The world of AI has come a long way since then!

Today OpenAI announced the launch of GPT-4, a brand new version of their GPT series of text models.

This blog post will summarise the changes we've made across PromptBase for GPT-4, and how we plan to accommodate GPT prompts going forwards.

Combining GPT prompts

The first major change is that we have combined all GPT prompts into a single category.

Codex, GPT-3, GPT-4 and ChatGPT prompts are now all categorised as GPT. The mode of a GPT prompt (chat or completion) is determined by the model selected, for example:

- gpt-3.5-turbo (ChatGPT): chat

- text-davinci-003 (GPT-3): completion

- gpt-4 (GPT-4): completion or chat
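As an illustration only, this categorisation can be thought of as a lookup from model name to the prompt modes that model supports. The sketch below is not PromptBase's actual code. `MODEL_MODES` and `prompt_mode` are hypothetical names, and the table contains only the three example models mentioned above.

```python
# Hypothetical lookup table: model name -> prompt modes it supports.
# Only the example models named in this post are included.
MODEL_MODES: dict[str, frozenset[str]] = {
    "gpt-3.5-turbo": frozenset({"chat"}),           # ChatGPT
    "text-davinci-003": frozenset({"completion"}),  # GPT-3
    "gpt-4": frozenset({"chat", "completion"}),     # GPT-4
}


def prompt_mode(model: str, requested: str) -> str:
    """Return the requested mode if the selected model supports it, else raise."""
    modes = MODEL_MODES.get(model)
    if modes is None:
        raise ValueError(f"unknown model: {model}")
    if requested not in modes:
        raise ValueError(f"{model} does not support {requested} prompts")
    return requested


print(prompt_mode("gpt-4", "chat"))
```

Keeping a single table like this means a future model only needs one new entry, rather than a new marketplace category.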

We have done this to accommodate future GPT models, and so that prompts are not duplicated across GPT models on the marketplace (e.g. the same prompt submitted separately as a GPT-3, GPT-4, ChatGPT and GPT-5 prompt).

OpenAI has adopted a slightly confusing naming system for their models, and they threw a curveball by initially releasing ChatGPT through a web UI with no API and no underlying model name. However, now that ChatGPT is also available through the API (as gpt-3.5-turbo), we believe this unified system mirrors the way OpenAI will version their models going forwards.

We encourage users to keep the GPT model of their prompt up to date with the most appropriate model for the task it carries out, in much the same way you'd submit an update to an iOS app on the App Store.

Submitting GPT prompts

Submitting GPT prompts is basically the same as before, except for a couple of extra steps.

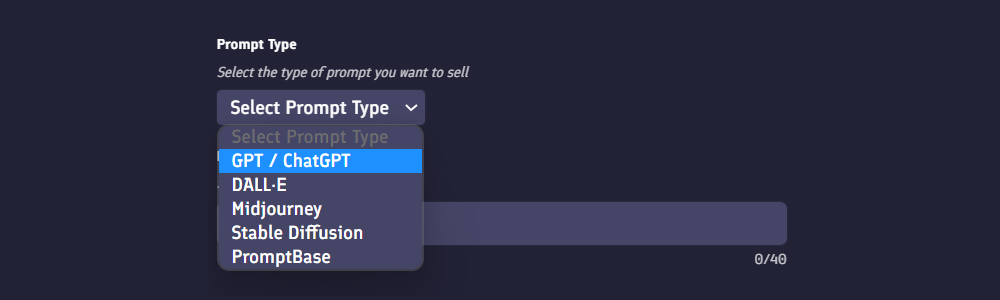

When submitting GPT prompts, select GPT / ChatGPT from the drop-down menu. We still mention ChatGPT here to guide users who may be developing prompts exclusively through the ChatGPT web UI:

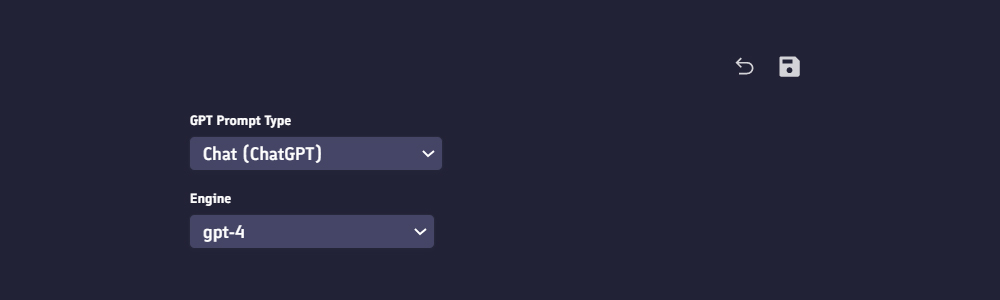

You'll then be asked if this is a completion-based prompt, or a chat-based prompt.

If you select Completion, you'll enter the familiar GPT-3 upload flow, where you copy the JSON for your prompt from the OpenAI playground.

If you select Chat, you'll enter the existing flow for ChatGPT prompts, where only the text prompt is required.
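For readers less familiar with the distinction, the practical difference between the two flows is the shape of the prompt: a completion prompt is a single block of text, while a chat prompt is a list of role-tagged messages. The sketch below builds request bodies in the shape used by OpenAI's Completions API (a `prompt` string) and Chat Completions API (a `messages` list). It only constructs dictionaries and makes no API calls; the helper names and example text are hypothetical, and this is not the format of the playground's JSON export.

```python
import json


def completion_request(model: str, prompt: str, **settings) -> dict:
    """Completion-style request body: the whole prompt is one string."""
    return {"model": model, "prompt": prompt, **settings}


def chat_request(model: str, system: str, user: str, **settings) -> dict:
    """Chat-style request body: the prompt is a list of role/content messages."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
        **settings,
    }


completion = completion_request(
    "text-davinci-003", "Write a haiku about the sea.", temperature=0.7
)
chat = chat_request(
    "gpt-3.5-turbo", "You are a poet.", "Write a haiku about the sea.", temperature=0.7
)
print(json.dumps(completion, indent=2))
print(json.dumps(chat, indent=2))
```

This is also why the Chat flow only needs the text of your prompt: the messages themselves carry the prompt, while settings such as temperature sit alongside them.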

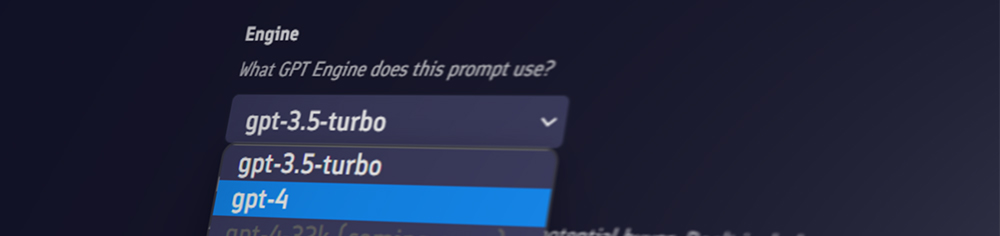

An extra step for the Chat flow is that we now require you to choose the chat model being used.

This corresponds to the options available through both the ChatGPT web UI and the OpenAI API.

Editing GPT prompts

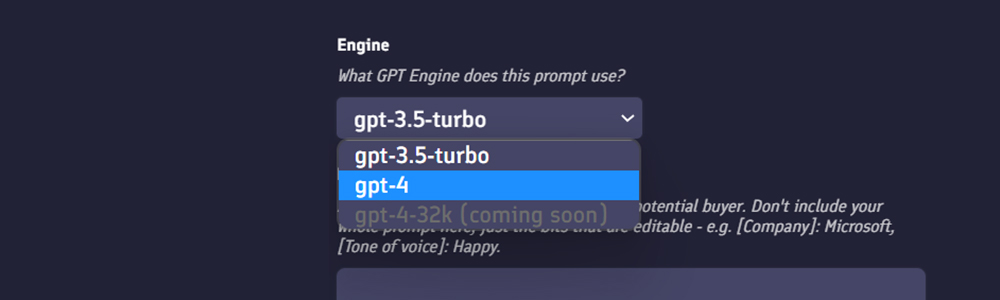

Editing GPT prompts works the same way as before; however, we have added the ability to freely switch your prompt between chat-based and completion-based GPT models.

You should select the best model to carry out the idea behind your prompt, whilst also taking into account price and the number of tokens within your prompt.

Other changes

Here is a list of other changes we have made relating to GPT prompts:

- Chat-based GPT prompts now display the token count and a cost estimate based on the number of tokens (instead of word count).

- All existing chat-based GPT prompts have their initial model set to gpt-3.5-turbo.

- All existing prompt purchases have been updated to reflect the changes to GPT prompts.

- Chat-based GPT prompts have extra settings such as temperature, top p and frequency penalty, set to the OpenAI playground's default chat-mode values (for both existing and new prompts).

- Model badges on profiles now show GPT instead of GPT-3 and ChatGPT.

- The profile carousel on the hire page now displays GPT prompt engineers instead of just GPT-3 prompt engineers.

- Removed the button to switch from GPT-3 to ChatGPT prompts.

- We are delaying the ability to select gpt-4-32k as a model until it becomes more widely available to the public.
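Showing cost based on tokens rather than words matters because OpenAI bills per token, and a single word can be split into several tokens. A minimal sketch of such an estimate is below, assuming a per-1,000-token price that you supply yourself; the price shown is a placeholder, not a quote, and `estimate_cost` is a hypothetical helper. In practice the token count could come from a tokenizer such as OpenAI's open-source `tiktoken` library.

```python
from decimal import Decimal


def estimate_cost(tokens: int, price_per_1k_tokens: Decimal) -> Decimal:
    """Estimate the cost of a prompt from its token count (not its word count)."""
    if tokens < 0:
        raise ValueError("token count cannot be negative")
    # Prices are quoted per 1,000 tokens, so scale the count down first.
    return Decimal(tokens) / Decimal(1000) * price_per_1k_tokens


# Placeholder price for illustration only; check OpenAI's pricing page.
PRICE_PER_1K = Decimal("0.002")
print(estimate_cost(1500, PRICE_PER_1K))
```

`Decimal` is used instead of `float` so that small per-token prices don't pick up binary rounding errors.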

Closing thoughts

Although GPT-4 scores higher than GPT-3.5 on almost every benchmark, you should thoroughly test your prompt with GPT-4 before deciding to update it.

In OpenAI's own evaluation, GPT-4's output was rated better than that of previous models around 70% of the time. That leaves roughly 30% of cases where upgrading to GPT-4 may not improve, and could even reduce, the output quality of your prompt.

At the time of writing this blog post, gpt-4 costs up to 30X more per token than gpt-3.5-turbo, and gpt-4-32k up to 60X more (both GPT-4 models charge more for completion tokens than for prompt tokens). Both GPT-4 models are also slower.

You should pick the model that accomplishes the idea behind your prompt most effectively at the lowest cost, rather than simply the one with the higher number at the end.
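One way to make that trade-off explicit is sketched below: among the models your prompt has passed your own tests with, choose the cheapest one whose context window fits the prompt plus its expected output. The relative costs reuse the multipliers quoted above (gpt-3.5-turbo = 1, gpt-4 = 30, gpt-4-32k = 60), and the context windows are OpenAI's launch figures (4,096, 8,192 and 32,768 tokens). Both may change, so treat them as assumptions. `Model`, `pick_model` and the `passed` set are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Model:
    name: str
    relative_cost: int   # cost per token relative to gpt-3.5-turbo (assumed)
    context_window: int  # max prompt + completion tokens (assumed)


# Assumed figures at the time of writing; check OpenAI's docs before relying on them.
CANDIDATES = [
    Model("gpt-3.5-turbo", 1, 4_096),
    Model("gpt-4", 30, 8_192),
    Model("gpt-4-32k", 60, 32_768),
]


def pick_model(
    candidates: Iterable[Model], passed: set[str], tokens_needed: int
) -> Optional[Model]:
    """Cheapest model that gave acceptable output in your tests and fits the token budget."""
    suitable = [
        m for m in candidates
        if m.name in passed and tokens_needed <= m.context_window
    ]
    return min(suitable, key=lambda m: m.relative_cost, default=None)


# Suppose your own tests found both gpt-3.5-turbo and gpt-4 good enough:
choice = pick_model(CANDIDATES, passed={"gpt-3.5-turbo", "gpt-4"}, tokens_needed=3_000)
print(choice.name if choice else "no suitable model")
```

With a 3,000-token budget this picks gpt-3.5-turbo; a 6,000-token job no longer fits its context window, so the same call would fall back to gpt-4.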

That being said, we are incredibly excited to see the brand new applications GPT-4 prompts can be applied to through skilled prompt engineering!